Geotimes

Technology

Earthquake warning tools

Josh Chamot

For as long as earthquakes have been a part of human history, the ability to

forecast a seismic event has been an elusive goal. Factors leading up to an

earthquake are incredibly complex and not yet completely understood; hence,

no reliable forecast method exists.

“Forecasting is considered a very hard problem,” says Kate Hutton,

a seismologist at Caltech. But she and other researchers are accepting the challenge

and are using recent advances in seismic and computational technology to attempt

to decipher Earth’s subtle clues. They are not seeking to predict quakes, but

rather to use a number of emerging technologies to provide enough early warning

to prevent fatalities.

By simulating

fault systems and analyzing motion on the finest scales, earthquake researchers

hope not only to learn more about motions in the planet’s crust and mantle,

but also to see initial hints or patterns that could reveal a pending tectonic

event. In the future, data resolution will be so high that monitoring sensors

may be able to rapidly assess damage and the location of greatest impact —

all at a speed necessary to direct an emergency response.

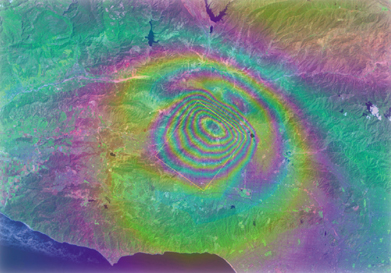

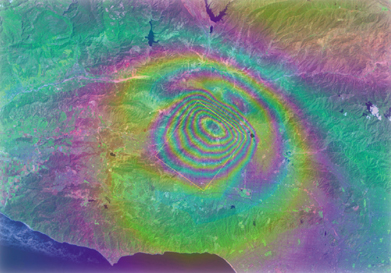

Simulation of deformation of the Earth’s

surface 500 years after the 1994 Northridge earthquake, generated by GeoFEST,

one of the QuakeSim simulation tools under development. Deformation is represented

as color fringes with each fringe representing elevation changes of 5.6 centimeters.

Federal agencies and universities have conceived a number of integrated simulation

and monitoring programs, and several of the largest are now coming online or

evolving to new levels. Three major initiatives demonstrate this trend on three

different levels: QuakeSim, principally managed by NASA’s Jet Propulsion

Laboratory at Caltech and researchers at several universities, is a large-scale

earthquake modeling and simulation program; TriNet, a collaborative effort between

Caltech, the U.S. Geological Survey (USGS) and the California Geological Survey

(CGS), is a local earthquake monitoring network; and the USGS Advanced National

Seismic System (ANSS) is a national earthquake monitoring network.

Simulation

Using data gathered through ground- and space-based measurements and high-powered

grid computing systems, QuakeSim is a computer modeling system that will help

researchers better understand the processes that lead up to earthquakes and

aftershocks. QuakeSim does not forecast, but instead is a way to bring technology

to bear on the complexity of earthquakes and other tectonic processes, including

volcanism. The system’s models may also reveal the patterns that emerge

before, during and after main events.

“The objective of QuakeSim is to understand the earthquake process through

integration of models of different scales,” says Andrea Donnellan of the

NASA Jet Propulsion Laboratory, who is principal investigator for the project.

“We are focusing on using long-term deformation data to model the process,”

with researchers able to access the information via the Web, she says.

The source data include information from space-derived GPS and InSAR data, as

well as land-based laboratory and field geologic data. The resulting simulation

has three computer tool components — PARK, GeoFEST, and Virtual California

— each producing results that should be compatible with many existing and

future research initiatives. Although QuakeSim is still a work in progress,

its programmers have tested the performance of all three programs and devised

strategies for how the programs will work together to model earthquake dynamics.

They have also begun developing a framework for making the data accessible to

numerous researchers at distant locations.

When it is complete, geophysicists and modelers will use PARK to study fault slip

on several spatial and temporal scales at the heavily researched Parkfield segment

of the San Andreas fault in California. They will be able to observe the entire

earthquake life cycle, analyzing slip (including non-quake motions), slip history

and stress at a given point along a fault, while simultaneously incorporating

information about every other point — potentially revealing earthquake

warning signals. To run the complicated algorithms, 1,024 computer processors

will work together in parallel.

Complementing the data from PARK, GeoFEST will model the evolution of stress

and strain in a detailed simulation of the crust and mantle, potentially helping

researchers predict the deformation from an earthquake or a series of quakes.

The results are 2-D and 3-D models, eventually capable of analyzing much of

the Southern California fault system, an area roughly 1,000 kilometers (621

miles) on a side.

The third QuakeSim tool, Virtual California, will simulate interactions between

hundreds of faults, ultimately correlating factors that may predict large earthquakes,

particularly events of magnitude 6.0 or greater. Large earthquakes are capable

of rupturing multiple fault systems. Modelers can correlate the simulations

to real data, and they are already putting this tool to the test. Geophysicists

used Virtual California to predict the regions of the state with elevated quake

probabilities. Since 2000, all of the region’s earthquakes of magnitude

5.0 or greater occurred within 6.8 kilometers of predicted sites.
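
A check like the 6.8-kilometer comparison above can be sketched in a few lines. The article does not say how the distances were measured, so this is only an illustration that assumes great-circle (haversine) distance on a spherical Earth; the function names and the coordinates in the usage note are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical-Earth assumption


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    # min() guards against tiny floating-point overshoot above 1.0
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def within_forecast(epicenter, forecast_sites, radius_km=6.8):
    """True if the epicenter lies within radius_km of any forecast site.

    epicenter and each forecast site are (latitude, longitude) pairs in degrees.
    """
    return any(haversine_km(*epicenter, *site) <= radius_km for site in forecast_sites)
```

For example, `within_forecast((34.0, -118.0), [(34.05, -118.0)])` compares a made-up epicenter with a made-up forecast site about 5.6 kilometers to its north, so it returns `True`.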

Knowledge gained from QuakeSim may help refine the results of TriNet and ANSS,

two systems already processing enormous amounts of data.

Local monitoring

Southern California experiences nearly 30 earthquakes every day. While most

quakes are not severe, the seismic activity provides a vibrant monitoring environment.

Funded since 1997, TriNet is an attempt to create an advanced real-time earthquake

information system for Southern California; it is now part of the larger California

Integrated Seismic Network (CISN).

The biggest current development for TriNet is its participation in CISN, says

Hutton, a member of the TriNet team. They are merging the data from several

of the largest seismic networks into a common database. “Besides making

the data more easily accessible to researchers and users, it also makes it possible

for northern California to back up southern California, and vice versa, in the

case of a really big one,” she explains.

TriNet built on the existing Southern California Seismic Network, a configuration

of more than 200 seismic monitoring stations that have been operated since the

1960s by Caltech and USGS. TriNet brought the network up to speed with the addition

of digital instruments and real-time processing and transmission. CGS has been

upgrading strong-motion monitoring systems at 400 sites in the California Strong

Motion Instrumentation Program, and USGS has been upgrading the 35 Southern

California-based sensors that are part of the network of 571 in the National

Strong Motion Program.

The data from all sensors travel to a central processing facility at Caltech

via digital phone lines, radio, microwave links and the Internet. Researchers

immediately analyze the data to determine where the quake occurred, its size,

the distribution of the shaking and the specific fault. Scientists participating

in CISN are wired to the system through paging and the Internet, with Web access

to high-quality, high-density research data for long-term research projects.

TriNet utilizes two types of seismometers: strong-motion accelerometers collect

ground motion data in violently shaken areas for seismological and engineering

data, and broadband seismometers record small and large seismic events.

Seismic monitoring stations collect data in both analog and digital formats.

Analog stations pass information via telephone lines to computer processors

at the processing center, where the data are converted into digital form.

However, the electronics and phone lines have a limited information range, so

data can go off the scale and get lost. Still, analog stations are easier to

maintain because they are simpler, and much of the hardware is at the central

processing center.

Digital stations have both high- and low-gain sensors, which allow data to stay

in scale no matter how large or small. They use 24-bit digitizers to convert

motion into a digital signal on site and send information via digital link to

the central processing station for immediate use. The benefit of digital is

that it can provide information for small and large quakes, and researchers

can check the data for errors, confirming that line noise has not corrupted any

of the information.
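
To give a feel for why a 24-bit digitizer helps keep data on scale, the sketch below computes the ideal ratio between the largest and smallest signal such a converter can represent, and shows what clipping looks like when a signal exceeds full scale. It is an idealized illustration, not a description of TriNet hardware: real digitizers deliver less range because of electronic noise, and the function names and the full-scale value are assumptions.

```python
import math


def dynamic_range_db(bits):
    """Ideal dynamic range of an N-bit digitizer: 20 * log10(2**N) decibels."""
    return 20.0 * math.log10(2 ** bits)


def digitize(value, full_scale, bits):
    """Convert a signed analog value to integer counts, clipping at full scale.

    full_scale is the largest magnitude the converter accepts (same units as value).
    """
    half = 2 ** (bits - 1)                      # counts available on each side of zero
    counts = round(value / full_scale * half)   # scale to counts
    return max(-half, min(half - 1, counts))    # off-scale values are clipped


if __name__ == "__main__":
    for bits in (12, 16, 24):
        print(f"{bits}-bit: {dynamic_range_db(bits):.1f} dB")
    # A signal at twice full scale is clipped to the top count and the true value is lost.
    print(digitize(2.0, 1.0, 24))
```

Each added bit contributes about 6 decibels, which is why a 24-bit converter spans far more of the range between tiny and violent ground motion than a 12-bit one.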

In total, TriNet incorporates 150 broadband sensors, 600 strong-motion sensors

and a central processing system designed to function at least in part if an

earthquake occurs in the vicinity. The data will yield an earthquake catalog

for activity at magnitude 1.8 and above, rapid descriptions of the events, a

ground motion database for greater than magnitude-4.0 events, frequency-dependent

ground motion maps, and integrated map data called ShakeMaps — automatically

generated maps that show ground-shaking intensity. Such products provide assistance

to the government, fire and rescue teams, utility companies, and the media so

that they can all respond to an earthquake crisis. But TriNet could perhaps

go a step further — to early warning.

“TriNet is on the cutting edge of seismicity monitoring,” Hutton says.

“Through modern computer and communications technology, seismic stations

close to the epicenter of a big quake warn that the P and S waves are actually

on the way.”

This type of early warning could provide several tens of seconds of notice before

a quake hits a given area — perhaps long enough to implement some responses

and allow people to secure their immediate surroundings. Using CISN stations,

the Pilot Seismic Computerized Alert Network will be able to recognize when

an earthquake outside of a populated area is in progress and send notification

to scientists and emergency personnel in nearby residential areas before shaking

arrives.
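
The arithmetic behind "several tens of seconds" can be sketched as follows. The idea is that a station near the epicenter detects the fast P wave, an alert is sent, and the damaging S wave reaches a more distant site later. The wave speeds (6.0 and 3.5 kilometers per second) are typical crustal values chosen for illustration, and the processing latency and all names are assumptions rather than figures from the article.

```python
def warning_time_s(site_km, station_km, vp_km_s=6.0, vs_km_s=3.5, latency_s=5.0):
    """Seconds between an alert being issued and the S wave reaching a site.

    site_km:    distance from the earthquake to the site being warned
    station_km: distance from the earthquake to the nearest detecting station
    latency_s:  assumed time to process the detection and deliver the alert
    """
    if site_km < 0 or station_km < 0 or latency_s < 0:
        raise ValueError("distances and latency must be non-negative")
    if vs_km_s <= 0 or vp_km_s <= vs_km_s:
        raise ValueError("expected 0 < S-wave speed < P-wave speed")
    alert_issued_s = station_km / vp_km_s + latency_s  # P wave reaches station, then processing
    s_arrival_s = site_km / vs_km_s                    # strong shaking reaches the site
    return max(0.0, s_arrival_s - alert_issued_s)      # no warning if shaking arrives first


if __name__ == "__main__":
    for site in (10, 50, 100, 200):
        print(f"{site:>4} km from the quake: {warning_time_s(site, 12):5.1f} s of warning")
```

Under these assumptions a site 100 kilometers away gets roughly 20 seconds of notice, while a site 10 kilometers away gets none, which is why such systems help most when the quake starts outside the populated area.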

National monitoring

TriNet has provided researchers with new ideas to take to the national level.

When ANSS is complete, the USGS will have networked 7,000 sites on the ground and in monitored

buildings across the nation, providing emergency personnel

with real-time information and recording scientific and engineering datasets

for both geological and engineering research.

“This system will upgrade our current monitoring capabilities through the

installation of new instrumentation and the integration of existing monitoring

efforts,” says Paul Earle, a research geophysicist at the USGS National

Earthquake Information Center. “ANSS will provide emergency response agencies

with information about the intensity of shaking and engineers with data to design

better buildings.” Scientists working to understand earthquake physics

can also use the data to improve forecasting abilities. “Earthquake monitoring

impacts many aspects of seismology and public safety beyond earthquake forecasting,”

he says.

In scope and scale, “ANSS is the authoritative seismic monitoring system

for the U.S.,” says Mitch Withers, research associate professor at the

Center for Earthquake Research and Information (CERI) at the University of Memphis.

ANSS goes beyond California, reaching Alaska, Hawaii, Puerto Rico and the continental

interior. Regional centers across the country will link the monitoring network

to local institutions. For example, the mid-America regional processing center

at CERI links university partners from Missouri to Virginia and processes more

than 700 data channels. Institutions exchange data in near real-time, although

current automated earthquake information is only available for the active part

of the New Madrid seismic zone.

“Given recent developments by the USGS, we are working to provide this

information for the entire region. The ANSS philosophy is to provide information

as quickly and reliably as possible and to continuously improve and reduce the

necessary [warning] time,” Withers says.

ANSS works on a tiered system in which unstaffed computers feed data to regional

processing centers, which in turn feed the national center. The regional and

national centers act as backups to each other, and local experts guide the process.

According to Withers, existing systems use multi-station procedures to produce

alarms for broad regions, although critical facilities use single-station triggers.

“Research is ongoing within the seismology community to develop faster

algorithms with reduced data requirements,” Withers says. However, implementing

such algorithms is difficult, he says. “Early warning is conceptually quite

simple but nontrivial to implement reliably and without false alarms.”
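
The difference between multi-station and single-station triggering can be illustrated with a simple coincidence rule: declare an alarm only when enough stations trigger within a short time window. This is a minimal sketch of that general idea, not the procedure ANSS or any regional network actually uses; the station names, the threshold of three stations and the 10-second window are all hypothetical.

```python
def coincidence_alarm(trigger_times_s, min_stations=3, window_s=10.0):
    """Return the time at which an alarm would fire, or None if it never does.

    trigger_times_s: dict mapping station name -> time (seconds) that station triggered.
    An alarm fires once min_stations stations have triggered within window_s seconds.
    Setting min_stations=1 reproduces a single-station trigger.
    """
    if min_stations < 1:
        raise ValueError("min_stations must be at least 1")
    times = sorted(trigger_times_s.values())
    for i in range(len(times) - min_stations + 1):
        last = times[i + min_stations - 1]   # time of the k-th trigger in this group
        if last - times[i] <= window_s:
            return last                      # alarm fires when the k-th station triggers
    return None


if __name__ == "__main__":
    quake = {"STA1": 50.0, "STA2": 52.0, "STA3": 55.0}
    glitch = {"STA1": 0.0}                   # one noisy station, no real event
    print(coincidence_alarm({**glitch, **{"STA2": 52.0, "STA3": 55.0, "STA4": 57.0}}))
    print(coincidence_alarm(quake))
    print(coincidence_alarm(glitch))                   # None: not enough stations agree
    print(coincidence_alarm(glitch, min_stations=1))   # fires on the glitch alone
```

The trade-off shows up directly: requiring several stations suppresses false alarms from a single noisy sensor but delays the alert until the last required station triggers, whereas a single-station trigger is fastest but fires on any glitch.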

In addition to the variety of data outputs, the information will yield additional

ShakeMaps, which are now active in California, Seattle and Salt Lake City, and

soon to come in Anchorage. Planners hope that ANSS will organize, modernize,

standardize and stabilize seismic monitoring in this country. The program, however,

is currently funded at only 10 percent of the approximately $150 million the

U.S. Congress authorized in 1999. If full funding is secured, researchers will

install 6,000 new instruments in urban networks, 1,000 in regional networks,

and 44 in minimal-risk areas to complete a national monitoring net. Two portable

25-station arrays will study aftershocks and earthquake hazards directly at

active sites, although permanent stations will conduct the bulk of monitoring

duties.

Despite myriad earthquake monitoring projects, these technologies can only go

so far. “Still, the best way to save lives and property,” Earle says,

“is to be prepared for an earthquake by building better structures and

having a quick and reliable emergency response system in place.”

Chamot is a freelance writer

based outside of Washington, D.C., and a frequent contributor to Geotimes.
