Geotimes

Feature

Earthquakes: Predicting the Unpredictable?

Susan E. Hough

Sidebars:

Warning: Tsunami

The earthquake record (print exclusive)

Ernest Rutherford, Nobel Prize winner in chemistry in 1908, observed that:

“All science is either physics or stamp collecting.” While this assessment

may be an affront to scientists of many persuasions, most scientists have an

appreciation for the sentiment: Modern science is expected to go beyond genteel

sorts of observations and descriptions of phenomena (“stamp collecting”)

and explore the reasons why things are the way they are (“physics”).

If there is a single litmus test for modern science, it is whether possessing

understanding provides not only a description of past events but also the basis

for prediction of the future.

Prediction is an area of frustration to seismologists. Our inability to predict

events, after all, can lead to future disasters, as the world just witnessed,

when a powerful earthquake struck in the Indian Ocean, causing a tsunami that

left hundreds of thousands dead (see sidebar).

The science, however, is not without predictive capability. We can now, for

example, predict with a fair measure of certainty how the ground will shake

during an earthquake on a given fault. But predicting exactly when and where

an earthquake will strike remains an elusive beast, always hiding just around

the next corner. This inability generates frustration among the public as well.

In contrast to economics, where predictions are constantly wrong and right in

equal measure, people look to earthquake scientists to take away the harrowing

element of unpredictability, and tend to apply a zero-tolerance standard to

emerging earthquake prediction efforts.

Waves of optimism

The earthquake prediction pendulum has swung from optimism in the 1970s to rather

extreme pessimism in the 1990s. Earlier work revealed evidence of possible earthquake

precursors: physical changes in the planet that signal that a large earthquake

is on the way. Using such precursors, the 1975 Haicheng earthquake in China

was predicted, saving untold thousands of lives. The prediction was almost entirely

based on an unusually prodigious sequence of foreshocks over several months

prior to the mainshock. When the magnitude-7.8 Tangshan earthquake struck northern

China, not far from Beijing, a year later, it had no such foreshock sequence,

and was not predicted. Estimates of the death toll from that event range from

250,000 to as high as 750,000.

Over time, scientists grew increasingly discouraged about relying on these precursors.

At the same time, a new scientific paradigm emerged that did not bode well for

earthquake prediction: chaos theory. According to this theory, large physical

systems behave in complex and often unpredictable ways. A small disturbance

in a sand pile, for example, can cause either a small avalanche or a large one,

depending on innumerable factors that can never be known in advance.

Some respected earthquake scientists argued that earthquakes are likewise fundamentally

unpredictable. The fate of the Parkfield prediction experiment appeared to support

their arguments: A moderate earthquake had been predicted along a specified

segment of the central San Andreas fault within five years of 1988, but had

failed to materialize on schedule.

Other scientists perhaps remained more open-minded, but concluded nonetheless

that research dollars would be better spent on studies to mitigate earthquake

hazards. From a practical standpoint, even if earthquakes could be predicted,

buildings and other infrastructure would still have to withstand the shaking.

A dearth of research focused specifically on prediction led to a vacuum that

was filled to some extent by pseudo-science — research done by individuals

on the fringes or outside of the scientific community. Earthquake prediction

soon earned itself a bad name, and reputable seismologists avoided use of the

“P-word” both in polite company and in proposals.

At some point, however, the pendulum began to swing back. Reputable scientists

began using the “P-word” not only in polite company, but also at meetings

and even in print. No single profound breakthrough stoked this newfound, yet

cautious, optimism. Specific earthquake prediction, involving narrow windows

in time, place and magnitude range, remains as elusive as ever. Even when the

Parkfield prediction experiment bore fruit with the occurrence of a magnitude-6

earthquake on Sept. 28, 2004, it was clear that scientists had been unable to

accurately predict the precise timing of even this anticipated earthquake.

The earthquake cycle

If the optimism regarding earthquake prediction can be attributed to any single cause, it might

be scientists’ burgeoning understanding of the earthquake cycle. The concept

of a cycle dates back to G.K. Gilbert’s pioneering and visionary work in

the late 19th century. Somewhat remarkably, before the association between faults

and earthquakes was clearly understood, Gilbert wrote of a process whereby earthquakes

would release strain in Earth, and would happen again only after sufficient

time had elapsed for the strain to rebuild. Harry Fielding Reid formalized this

concept with the development of elastic rebound theory in the aftermath of the

1906 San Francisco earthquake, which killed 3,500 people.

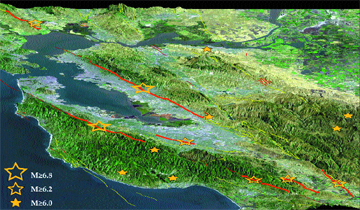

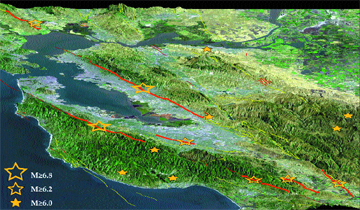

During the 75 years before the great 1906 earthquake on the San Andreas Fault,

which killed approximately 3,500 people, the San Francisco area suffered at

least 14 shocks greater than magnitude 6.0 on all its major faults, including

two events greater than magnitude 6.8. During the succeeding 75 years, there

was only one shock larger than magnitude 6.0. The release of stress building

up to the larger earthquake could shed light for seismologists trying to develop

earthquake patterns for local regions, as Ross Stein and colleagues reported

in Nature in 1999. Courtesy of R. S. Stein, Nature, 402, pp. 605-609,

1999.

The plate tectonics revolution of the mid-20th century led to the realization

that most of the planet’s large earthquakes occur along boundaries between

plates. The motion of the plates, driven by convection in the mantle, provides

the engine that drives the earthquake cycle. This understanding led to the “seismic

gap theory”: If motion at plate boundaries is accommodated by earthquakes,

then future large earthquakes can be expected along plate boundary segments

that are conspicuously quiet. Although the details of this theory have been

debated, the idea has apparently been vindicated as scientists have teased out

the earthquake histories of large plate boundary faults (see sidebar in Geotimes

print edition).

Seismic gap theory does not, however, lead immediately to prediction. Large

earthquakes on a given segment of the San Andreas Fault might recur, say, every

150 years on average, but if, as geologic data suggest, individual pairs of

earthquakes are anywhere from 100 to 200 years apart, prediction is clearly

hopeless. Indeed, scientists’ efforts through the late-20th century focused

on the business of long-term earthquake hazard assessment rather than prediction.

Hazard assessments use probabilities to highlight those zones where earthquakes

are likely to occur in a given time period, based on past activity. Such efforts

have been implemented with increasing sophistication in recent years, on a national

level by the U.S. Geological Survey and in California by a collaboration with

the California Geological Survey.
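
To make the idea of a probabilistic hazard assessment concrete, here is a minimal sketch. It assumes the simplest possible model, a time-independent Poisson process, in which the chance of an earthquake does not depend on how long ago the last one struck. That model, the function name and the chosen time windows are illustrative assumptions rather than the method used in any official assessment; only the 150-year average recurrence interval comes from the example above.

```python
import math


def probability_of_event(mean_recurrence_years: float, window_years: float) -> float:
    """Chance of at least one earthquake in the window, assuming a Poisson process."""
    if mean_recurrence_years <= 0:
        raise ValueError("mean recurrence interval must be positive")
    if window_years < 0:
        raise ValueError("window length cannot be negative")
    # For a Poisson process with rate 1/T, P(no event in t years) = exp(-t / T).
    return 1.0 - math.exp(-window_years / mean_recurrence_years)


# Illustrative value from the text: a 150-year average recurrence interval.
MEAN_RECURRENCE = 150.0

for window in (1, 30, 100):
    p = probability_of_event(MEAN_RECURRENCE, window)
    print(f"{window:>3}-year window: {p:.1%} chance of at least one large earthquake")
```

Under these assumptions, a 30-year window carries roughly an 18 percent chance of a large earthquake: useful for deciding where to strengthen buildings, but a long way from a prediction.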

In parallel with these efforts to predict long-term hazards, some scientific

studies have shed further light on the details of the earthquake cycle. The

best promise for prediction lies in intermediate-term prediction: identification

of regions or faults where earthquakes are likely to occur within a few months,

years or, at most, decades.

Path to prediction

One promising line of research is finding that large earthquakes recur especially

regularly along some plate boundary segments, as illustrated by the Parkfield

prediction experiment. Although the 2004 temblor was late, its location and

magnitude were precisely what scientists had said it would be (see Geotimes

Web Extra, Sept. 28, 2004). The value of the prediction in this case was

not so much its warning to the general public but rather its impetus to scientists

to install sophisticated monitoring equipment to catch the earthquake “red-handed.”

Monitoring has expanded recently with the San Andreas Fault Observatory at Depth experiment,

which is drilling into the heart of the active fault. Among the objectives is

to better understand the small earthquakes that recur on the fault almost like

clockwork.

In other regions, for example parts of Japan and perhaps the Hayward Fault in

the San Francisco Bay Area, the long-term regularity of earthquakes might allow scientists

to make meaningful predictions of potentially damaging earthquakes that are

likely to occur within the next few decades. Intermediate-term predictions would

be of significant value in regions such as San Francisco, as they would identify

regions where hazard mitigation efforts (for example, retrofitting of vulnerable

structures) should be focused. One might also imagine future intermediate-term

prediction experiments designed to capture expected large earthquakes.

Another area of research, which is both intriguing and controversial, looks

at evidence that earthquake activity over a given region can signal the approach

of a large earthquake. Some studies suggest that an impending large earthquake

is heralded by an increase in moderate earthquakes in the region surrounding

the fault, over a timescale of years to at most a couple of decades.

One conceptual view of this phenomenon is that the rate of moderate earthquakes

is suppressed after a large earthquake and returns to “normal” prior

to the next large earthquake. A different view is that the rate of moderate

earthquakes increases as the crust is strained around an impending rupture,

just as a flexed pencil might start to crack around the area that will eventually

break. Seismologists describe such a phenomenon as “accelerating moment

release” — an increase in the rate of energy released by earthquakes.

The accelerating moment release concept is not new, as prediction schemes have

been developed around this idea since the 1980s. However, since the 1990s, scientists

have developed an increasingly sophisticated theoretical and computational framework

to further explore the concept. In particular, we are now able to use computer

programs to predict not only how one earthquake will change the stress in the

neighboring regions of the crust, but also how an impending earthquake will

affect the crust.

To date, regions of accelerating moment release have been identified after large

earthquakes have occurred. Knowing the time and place of a large earthquake

allows scientists to look back and identify the pattern. The trick, of course,

is to predict earthquakes before they happen. This requires analysis of ongoing

earthquake patterns and identification of regions where the rate of earthquakes

is increasing.
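
As a rough illustration of what such an analysis involves, the sketch below converts earthquake magnitudes to seismic moment with the standard moment-magnitude relation and compares the moment released in the later half of a time window with that released in the earlier half. The catalog is invented purely for illustration, and the half-window comparison is a deliberately crude stand-in, not the measure used in published studies, which fit more refined quantities to real catalogs.

```python
def seismic_moment(mw: float) -> float:
    """Seismic moment in newton-metres from moment magnitude (standard relation)."""
    return 10 ** (1.5 * mw + 9.1)


def moment_rate_ratio(catalog, t_start: float, t_end: float) -> float:
    """Moment released in the later half of [t_start, t_end) divided by the earlier half."""
    midpoint = (t_start + t_end) / 2
    early = sum(seismic_moment(m) for t, m in catalog if t_start <= t < midpoint)
    late = sum(seismic_moment(m) for t, m in catalog if midpoint <= t < t_end)
    if early == 0:
        raise ValueError("no events in the earlier half of the window")
    return late / early


# Hypothetical catalog: (time in years, magnitude). Invented for illustration only.
catalog = [(1.0, 5.0), (4.0, 5.1), (8.0, 5.0), (12.0, 5.4), (15.0, 5.6), (18.0, 5.9)]

ratio = moment_rate_ratio(catalog, t_start=0.0, t_end=20.0)
print(f"Later-half to earlier-half moment ratio: {ratio:.1f}")
if ratio > 1:
    print("Moment release is accelerating in this (made-up) catalog.")
```

Because each unit of magnitude corresponds to a roughly 32-fold increase in moment, a single moderate event can dominate the sum, which is one reason a ratio like this is easy to compute in hindsight and hard to trust in advance.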

Unfortunately, an increasing rate of earthquakes does not always signal an impending

large event. For this and other reasons, such investigations generally remain

in the realm of research science — not yet mature enough to provide the

basis for earthquake prediction. Still, a few researchers have been so bold

as to identify patterns before the fact and suggest that a large earthquake

might be imminent.

In recent years, for example, a group of scientists at the University of California,

Los Angeles, led by Vladimir Keilis-Borok made headlines with a new twist on

the method. Looking back at previous large earthquakes in several regions, Keilis-Borok’s

team identified a pair of patterns that they considered to jointly represent

a reliable precursor. Increasing moment release was one of the patterns; the

other was the appearance of a cluster of moderate earthquakes stretching over

a specified fault length and within a specified time span. When both of these

criteria are met, a prediction is issued for the region around the cluster,

lasting for nine months.

Keilis-Borok’s group circulated initial predictions privately among colleagues.

Although they have not published full results, two predictions appear to have

been fulfilled by large earthquakes in northern Japan and central California

in late 2003. Several subsequent predictions, however, including one for a large

earthquake in southern California in 2004, garnered substantial attention from

the media as well as the scientific community, but proved to be failures (see

Geotimes Web Extra, Sept. 7, 2004). No prediction

had been made by any researchers prior to the devastating Sumatra earthquake

of late 2004.

Future chatter

The highly publicized recent failure of the Keilis-Borok prediction and the

qualified (and belated) success of the Parkfield prediction perhaps illustrate

where seismologists stand in our quest for earthquake prediction: We are quite

good at identifying where large earthquakes are likely to occur on time scales

of several decades to centuries, but still unable to identify regions where

earthquakes will happen tomorrow, next week, or even within the next few years.

For this to change, scientists would have to identify a precursor that reliably

signals an impending large earthquake.

An intriguing new candidate did emerge at the close of 2004, when Robert Nadeau

and David Dolenc of the University of California, Berkeley, identified “tremors”

— a sort of seismological chatter — on the San Andreas fault near

Parkfield, below the part of the fault where earthquakes occur. Bursts of tremors

may portend future earthquakes, perhaps the signature of fluid migration in

the deep crust.

It is too early to know where these new signals might lead us, but the search

for the Holy Grail continues.

Warning: Tsunami

Before: Lhoknga, a village near the capital city of Banda Aceh, in the Aceh province on the western coast of Sumatra, is lush and developed in this satellite picture taken on Jan. 10, 2003. A white-colored mosque is in the center of the town.

After: Taken Dec. 29, 2004, three days after the tsunami hit the western coast of Sumatra, this image shows the complete destruction of Lhoknga, with the exception of the white mosque. The Dec. 26 tsunami washed away nearly all the trees, vegetation and buildings in the area. Images courtesy of Space Imaging/CRISP-Singapore.

In the aftermath of the Dec.

26 earthquake and tsunami in the Indian Ocean, officials from a dozen countries

pledged to establish a tsunami warning system there. At about the same time,

the United States proffered more than $35 million to beef up the tsunami

warning system in the Pacific Ocean, while also expanding it to the Atlantic

Ocean and the Caribbean. But predicting disaster is always tricky, and warning

communities about an imminent event may be even harder. For extreme events

that happen relatively rarely, such as earthquakes and tsunamis, keeping

attention and technology focused on the possibility of a disaster becomes

key.

In the United States, concerns linger over the efficacy of the current Pacific

warning system, a model for the proposed system in the Indian Ocean. Since

1968, a consortium of countries under UNESCO has operated the system in

the Pacific Ocean, spurred by the 1964 Good Friday earthquake and subsequent

tsunami that hit Anchorage, Alaska, and killed more than 120 people. Tracking

tsunamis hinges on tracking the earthquakes that generally trigger the big

events, and so data from the U.S. Geological Survey (USGS) and other seismology

sources are the bedrock of the Pacific system.

Studying the oceans is important as well for tsunami warning. The National

Oceanic and Atmospheric Administration (NOAA) established science research

efforts to model and predict tsunamis, backed up by a system of buoys and

coastal water-level gages to track ocean waves, in hopes of detecting the

several-centimeter swells of oncoming waves or surges before they strike.

In the past, NOAA has successfully forecast, after major earthquakes, whether

or not a tsunami would hit Pacific shorelines.

Barry Hirshorn of the Pacific Tsunami Warning Center in Hawaii says that

their biggest success came in 2001, when a magnitude-8.4 earthquake struck

offshore Peru; even though they could not warn local Peruvian communities in time,

which were inundated by a large tsunami, the center could assure other locations

in the Pacific that the destructive wave would not reach them.

But of the six buoys deployed in 2000, known as DART (for Deep-ocean Assessment

and Reporting of Tsunamis), only three currently function; the other three

have broken down and are awaiting repairs. “We have a skeletal system,”

says Yumei Wang, a geotechnical engineer at the Oregon Department of Geology

and Mineral Industries.

The current system is set up to recognize large, distant offshore earthquakes,

such as the 1964 Alaska quake, and then track subsequent tsunamis, Wang

says. Because these tend to originate far from the Pacific Northwest coast,

residents would have longer lead times for those events. She says that “one

of the downfalls of the current warning system is that it won’t help

on local tsunamis in Cascadia,” a subduction zone where the oceanic

plate is diving beneath the continental plate. A tsunami caused by a local

offshore earthquake would give nearby coastal residents only minutes to

escape (which is analogous to the situation in Sumatra).
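
The difference in lead time follows from simple arithmetic. In the open ocean a tsunami behaves as a long wave whose speed is approximately the square root of gravitational acceleration times water depth. The sketch below applies that approximation to two hypothetical scenarios; the distances and depths are assumptions chosen only to contrast a local source with a distant one, and a uniform depth along the path is a strong simplification.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def tsunami_speed(depth_m: float) -> float:
    """Long-wave (shallow-water) speed in m/s for a given ocean depth."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return math.sqrt(G * depth_m)


def travel_time_minutes(distance_km: float, depth_m: float) -> float:
    """Travel time in minutes over a path of uniform depth."""
    return distance_km * 1000.0 / tsunami_speed(depth_m) / 60.0


# Hypothetical scenarios, chosen only to contrast local and distant sources.
local_minutes = travel_time_minutes(distance_km=100, depth_m=2000)
distant_minutes = travel_time_minutes(distance_km=4000, depth_m=4000)

print(f"Local source:   about {local_minutes:.0f} minutes")
print(f"Distant source: about {distant_minutes / 60:.1f} hours")
```

With these assumed numbers, the local wave arrives in a little over ten minutes while the distant one takes several hours, which is why a system built around distant earthquakes offers little help for a rupture just offshore.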

A system to warn Oregon and Washington residents would require an accelerometer

network and other devices “that measure ground motion and quickly analyze

it to tell what kind of earthquake it is,” Wang says. Such a system

would identify in real time whether it is a very deep earthquake, well beneath

the contact between the two plates — and therefore not a threat —

or a shallow offshore one that could push ocean water or cause a landslide

that would trigger a local tsunami. But such a system would take “a

lot of money,” she says. Even so, the Pacific tsunami warning system

is “magnitudes [better] than not having a system at all.”

John Orcutt of Scripps Institution of Oceanography, a San Diego-based research

institution, says that part of the problem “is lack of attention and

funding in between hazards.” Interest is in particular danger of waning in the Indian

Ocean, where the tsunami threat is on a century scale, in contrast to the more

frequent events of the Pacific. Thus, effective, sustained

education and communication are paramount, in addition to the science.

Indeed, while seismologists in Colorado and Hawaii saw the Dec. 26 Sumatra

earthquake and could guess a tsunami might be possible, they had no one

to tell, says Michael Glantz of the Environmental and Societal Impacts Group,

at the National Center for Atmospheric Research, in Boulder, Colo. “To

do just the science, the technological side, is like clapping with one hand.”

Wang suggests that simple warning signs on beaches and in hotel rooms might

have helped save lives in the Indian Ocean tsunami, and such an effort is

exactly what coastal cities must do in Oregon, Washington and California.

“You need a community-level mitigation plan, not just instruments,”

she says. Orcutt suggests setting up a system of e-mails, text messages

sent directly to cell phones, and other communication trees that could be

enacted but haven’t been.

“Building an early warning system technologically is not hard. [But]

you have to make sure that it’s useable, that someone gets it. You

may even have to make sure they use it,” Glantz says. In addition,

he says, the governments that pledge to establish a warning system today

must be willing to support it for decades, even if a tsunami doesn’t

come.

At the January U.N. meeting on disaster reduction, Japan, the United States

and others promised to help establish a tsunami warning system for the Indian

Ocean. UNESCO estimated it would take $30 million initially, with maintenance

costs of $2 million a year, to get a system going by June 2006, with hopes

of establishing a “worldwide alert system” the following year.

In the United States, the Bush administration’s commitment of $37.5

million to technologically improve the national system will be a funding

allocation that Congress will debate in the coming year. More than 20 buoys

would be added to the Pacific with the funding, which would also go toward

deploying half a dozen buoys in the Atlantic and upgrading and expanding

the global seismic observation network.

Although the risk is small on the U.S. eastern seaboard, researchers from

USGS, the Woods Hole Oceanographic Institution and elsewhere have found that

large earthquakes on the Puerto Rico and Hispaniola subduction trenches,

where one plate rides onto another, or an underwater landslide could potentially

trigger a tsunami that would inundate local shorelines and possibly the

East Coast. (One model of such a scenario was published on Dec. 24 in the

Journal of Geophysical Research, two days before the Indian Ocean

tsunami struck.) Further north, an earthquake-triggered submarine landslide

did just that in 1929, inundating Grand Banks, Newfoundland, with a tsunami

that killed 27 people.

But all current tsunami models still need real-time data to calibrate their

results, scientists say. They also need more mapping of coastal shores,

for both determining past tsunami run-ups and improving bathymetry and land

topography for 3-D modeling.

And no matter where they are, tsunami warning systems have to be affordable

if they are to survive and help people survive. “You have to think

in the long-term,” Orcutt says.

Naomi Lubick

Hough is a seismologist with the U.S. Geological Survey in Pasadena, Calif. She is also

co-author of the upcoming book After the Earth Quakes: Elastic Rebound on an

Urban Planet and is a Geotimes corresponding editor.

Links:

"Botched earthquake prediction," Geotimes Web Extra, Sept. 7, 2004

"Parkfield finally quakes," Geotimes Web Extra, Sept. 28, 2004
